Amore is a virtual world that aims to fundamentally change how people meet, interact, and love one another via fully customizable 3D avatars. The avatars move when you move, speak when you speak, and can even express emotions. In the world of Amore, anything is possible. You can dance the night away in the nightclub, participate in meditations, and even meet your soulmate, all from the comfort of your own home. You can also improve your social skills and rid yourself of social anxiety by interacting with the Amore AI. People need a new world to escape to, and Amore provides exactly that. There are events such as movies, virtual speed dating, and more. Users can draw together, view 360 content, play sports, and even take selfies. Conventional virtual worlds allow people of all ages to communicate, but miss the mark when it comes to forming intimate relationships. That is why Amore is an adult-only virtual world, and how the name came to be.

EngageAR is an augmented reality conversational demo developed using ARCore and IBM Watson. The app lets you talk with a virtual person as if you were talking to someone in real life. Using ARCore, users can spawn the person anywhere they want and then walk around them as if they were really there. The virtual person always turns to face the user, wherever the user stands. Using IBM Watson, users can speak into their phone to “talk” to the person and hear them respond, simulating a real-life conversation. All of this is possible using your existing Android smartphone and the EngageAR app. Download the Unity project and try it for yourself by clicking the image above!
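As a rough illustration of how a virtual person can keep facing the user, the sketch below computes a yaw angle from the character's floor position to the user's. It is not the project's code (EngageAR was built in Unity); the function name `yaw_to_face` and the convention that 0 degrees faces +Z and 90 degrees faces +X are assumptions made for this example.

```python
import math


def yaw_to_face(character_xz, user_xz):
    """Return the yaw in degrees (0 to 360) that turns a character toward the user.

    Positions are (x, z) pairs on the floor plane. Assumed convention:
    yaw 0 faces +Z and yaw 90 faces +X. Height is ignored so the character
    turns in place instead of tilting up or down.
    """
    dx = user_xz[0] - character_xz[0]
    dz = user_xz[1] - character_xz[1]
    # atan2(dx, dz) measures the angle from the +Z axis toward the +X axis.
    return math.degrees(math.atan2(dx, dz)) % 360.0


# Example: the user walks from in front of the character to its right side.
print(yaw_to_face((0.0, 0.0), (0.0, 2.0)))
print(yaw_to_face((0.0, 0.0), (2.0, 0.0)))
```

Recomputing this angle every frame, and applying it only around the vertical axis, is one simple way to get the "always facing you" effect.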

SolAR is a solar system visualizer for the Meta 2 augmented reality headset. The application lets you view the different planets in the solar system in 3D, right before your eyes. Users can reach their hands out and swipe to change planets, make a fist to grab the planet, rotate their hands to rotate the planet, and move their hands closer together or farther apart to make the planet smaller or bigger. In addition, if the user looks slightly down, they will see a panel with information about the planet currently being viewed and can swipe on the panel to scroll up and down. A narrator automatically reads the information presented, and there is background music as well. Stars surround the user, and each planet includes high-resolution textures for added realism. The application was developed to show how augmented reality technology can make science education more immersive.
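The two-handed resize gesture can be sketched as a simple ratio: the planet's scale follows the current distance between the hands divided by the distance when the gesture began. This is only an illustrative sketch, not SolAR's implementation; the function name `scaled_size` and the clamp limits are assumptions chosen for the example.

```python
def scaled_size(start_scale, start_hand_distance, current_hand_distance,
                min_scale=0.25, max_scale=4.0):
    """Scale an object by how far apart the hands are relative to the gesture start.

    min_scale and max_scale are hypothetical limits that keep the object
    from shrinking to nothing or growing without bound.
    """
    if start_hand_distance <= 0:
        # Without a usable starting distance, leave the object unchanged.
        return start_scale
    factor = current_hand_distance / start_hand_distance
    return max(min_scale, min(max_scale, start_scale * factor))


# Hands start 0.2 m apart and move to 0.4 m apart: the planet doubles in size.
print(scaled_size(1.0, 0.2, 0.4))
```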

EngageVR is a virtual reality application meant to improve the user's social skills by allowing them to talk to three different women, each with her own background and appearance. The user can ask these women basic questions about themselves (occupation, hobby, age, location, etc.) and answer questions as well. Each woman makes eye contact with the user and moves her mouth as if she is speaking. The user can choose between three different environments (Tavern, Cafe, and Restaurant) and can pick things up around them. If they look down, they see a full body, which helps them feel as if they are really in the experience. The app is meant to deliver a level of presence that allows the user to engage in a real conversation. It is currently in development and uses SteamVR for the virtual reality capabilities as well as IBM Watson for the artificial intelligence capabilities.

Time Capsule VR allows you to experience what it is like to live someone else's life in virtual reality. It achieves this by taking you through different stages of the life of Mr. Byrd, and each stage requires you to do different things in order to progress to the next one. It utilizes the Google Speech API for voice recognition on Android. Time Capsule VR was developed for the Oculus Go and Samsung Gear VR, and uses the remote to let you point at the floor to teleport around and point at objects to grab them. The app was developed for my Capstone project as part of the Udacity VR Developer Nanodegree, where I was required to meet a certain number of achievements in order to pass. The achievements that the app met were gamification, physics, video player, locomotion, empathy, animation, lighting, scale, and speech recognition.

Thalassus VR was an app developed for the Storyteller’s Revenge project as part of the VR Developer Nanodegree from Udacity. Thalassus means “Sea” in Greek. The project taught me how to stitch together 180-degree videos in Autopano Video as well as edit the video in Adobe Premiere. Users find themselves on a ferry and, after a period of time, on a pirate ship. They can switch between the two boats by looking at a button and pressing the trigger, which skips to a specific time in the video. They can restart the experience the same way, and play or pause the video at will. The experience was created for the Google Cardboard.

Virtual Tourist is a VR application that I developed for my Night at the Museum project as part of Term 2 of the Udacity VR Nanodegree program. The software enables you to learn about any of the six featured destinations and even visit them in virtual reality. These destinations are Paris, London, San Francisco, Rome, Las Vegas, and New York. I picked these places from a list of the most popular vacation spots that I found via a Google search. I created the app using the Unity game engine, and it requires a Google Cardboard headset.

Puzzler VR was an app I developed for the Udacity VR Developer Nanodegree Puzzler Project. The app allowed you to solve a puzzle by gazing at objects and clicking a button. To solve the puzzle, you had to click the orbs in the correct order. It was developed for the Google Cardboard.
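The "click the orbs in the correct order" rule can be sketched as a small state tracker. This is an illustrative sketch only, not the app's code: the class name `OrbPuzzle`, the orb labels, and the choice to reset progress after a wrong click are all assumptions made for the example.

```python
class OrbPuzzle:
    """Tracks whether orbs are clicked in the order given by `solution`."""

    def __init__(self, solution):
        self.solution = list(solution)
        self.progress = 0  # number of correct clicks made in a row so far

    @property
    def solved(self):
        return self.progress == len(self.solution)

    def click(self, orb):
        """Register a click on `orb` and return True once the puzzle is solved."""
        if self.solved:
            return True
        if orb == self.solution[self.progress]:
            self.progress += 1
        else:
            # Assumed behavior: a wrong orb sends the player back to the start.
            self.progress = 0
        return self.solved


puzzle = OrbPuzzle(["red", "blue", "green"])
for orb in ["red", "green", "red", "blue", "green"]:
    print(orb, puzzle.click(orb))
```

In a gaze-based app, `click` would be called with whichever orb the player is looking at when the button is pressed.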

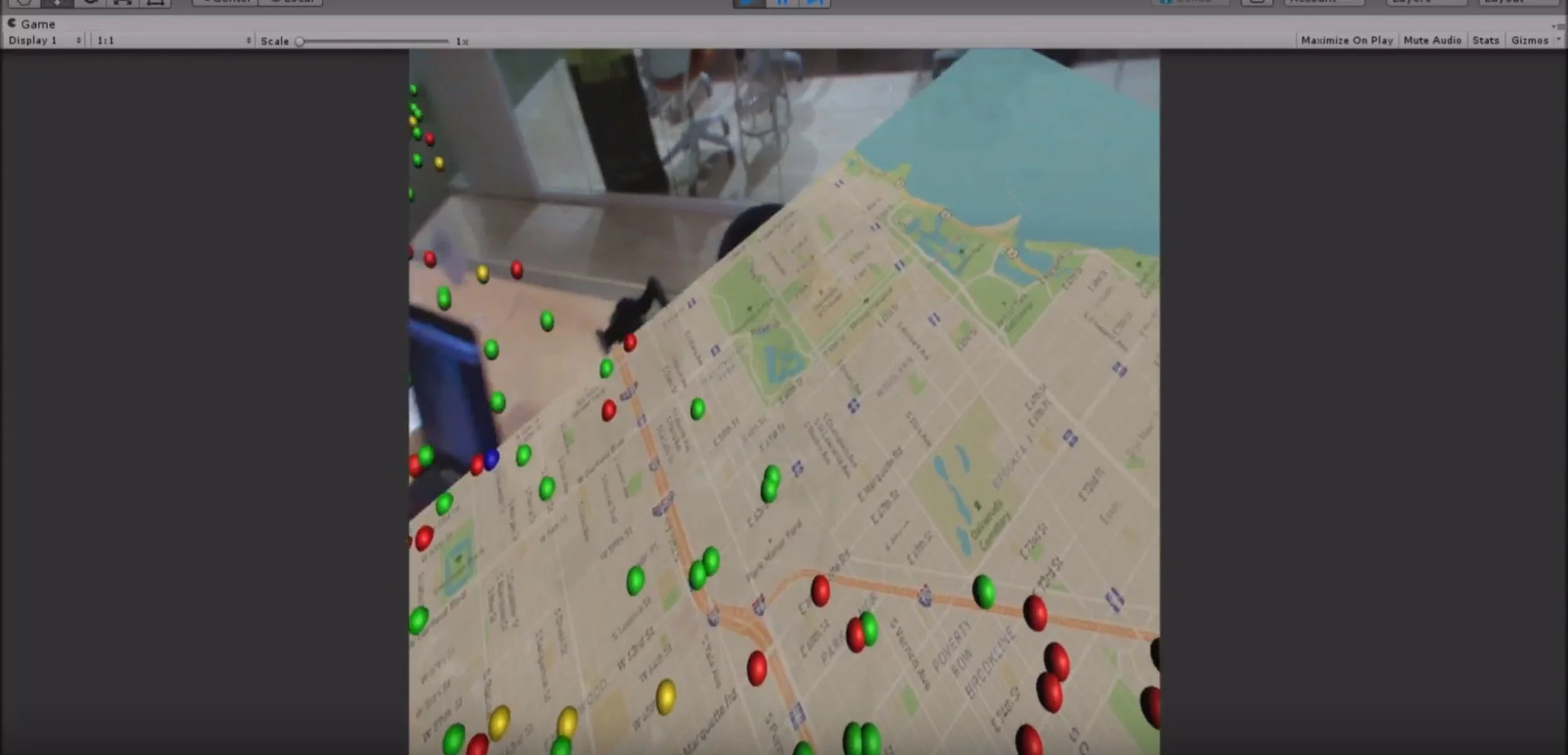

Meta Data allows the user to explore collections of data about incidents of homicide and other capital crimes in a geo-located environment. Drilling down into a specific incident gives the user a street-level perspective of the scene of the crime and additional information about the event drawn from many publicly available sources. The app was developed for the Reality Virtually hackathon 2017.

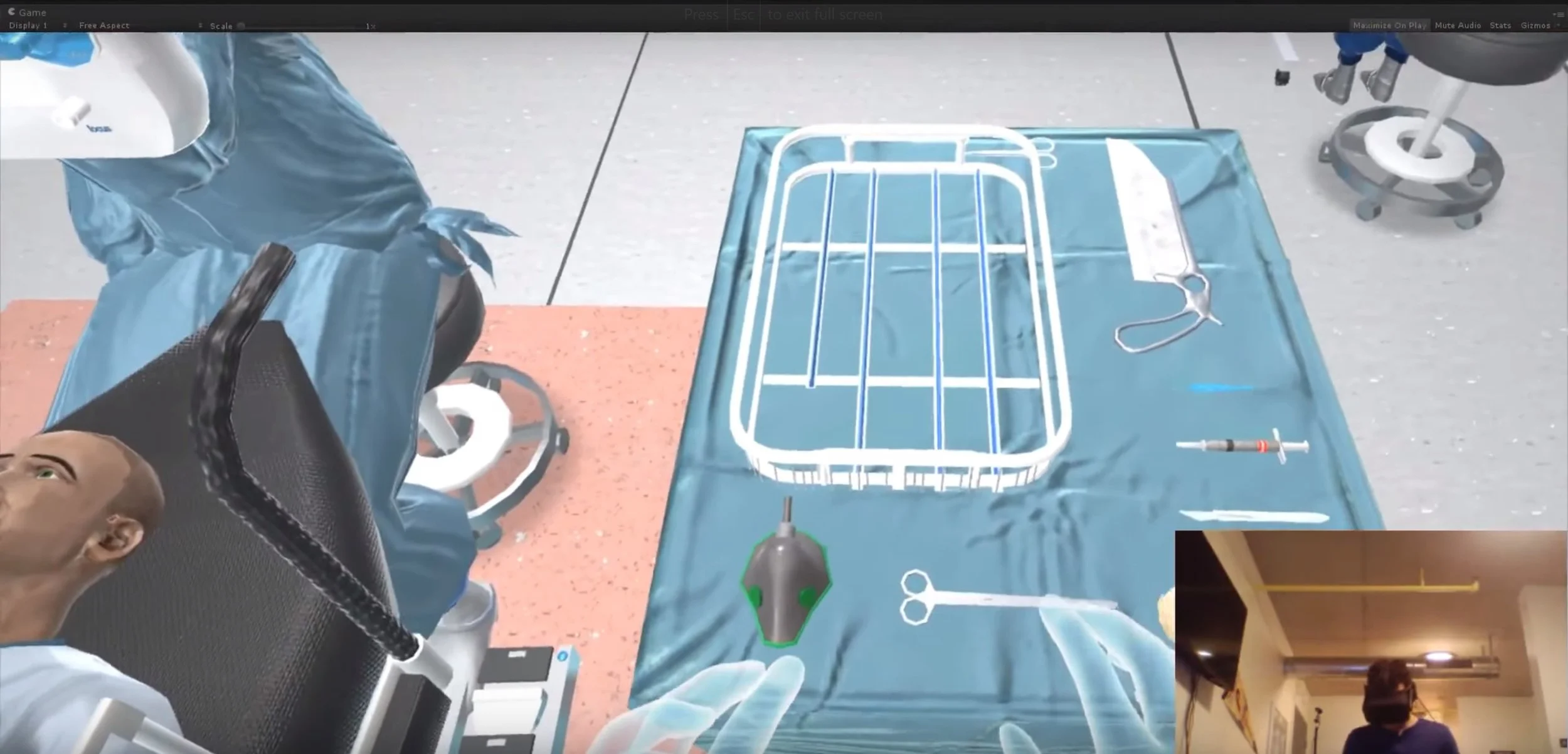

SimulatOR was an application that I developed with a team of doctors at Philly Codefest 2017. It trained medical professionals to accurately and safely perform the World Health Organization Surgical Safety Checklist. The app used speech recognition so users could talk to the patient and report information, such as verifying the quantity of tools available in the room. It also allowed users to teleport around the OR and grab things by reaching their hands out and pressing the trigger. It was built for the Oculus Rift and integrated the Oculus Touch for hand interactions. We were awarded first place for the Accelerate Health Tech Award as well as the grand prize for the Best Collaborative Hack for our efforts.

StudyVR is a learning tool for the HTC Vive that helps grade school kids connect with the concepts they are learning, using kinesthetic, hands-on interaction to engage them on a deeper level and bring science to life. The user starts out in the hub, where they see books around them. If they lift a book up and put their head inside the portal in the book, they enter that world. The application took advantage of the haptics in the HTC Vive controllers to simulate pressure as the user moves the controllers closer together or farther apart. I created this application with a group of students from Drexel University for the Reality Virtually hackathon at MIT, and we won first place for Best Human Well Being app.

SpeakAR is an application for the Microsoft HoloLens that listens to speech around the user and translates it into a language the user can understand. The visual ASL translations are especially helpful for deaf users, because ASL is often their native language. I created the application along with three others in under 24 hours at PennApps XIV at UPenn. We utilized Unity's built-in dictation recognizer API to convert spoken words into text. Then, using a combination of GIFs and 3D-modeled hands, we translated that text into ASL. The scripts were written in C#, and we won the Grand Prize for this innovative approach to real-time speech visualization.

Physical Therapy in Virtual Reality, or PTVR for short, is an application that allows stroke victims to strengthen their motor skills through a set of interactive exercises. It was developed for the Leap Motion and Oculus DK2 using Unity, and it lets users see their own hands in virtual reality. The user plays several short training exercises, including connect-the-dots and darts, that work on their range of motion, reaction time, and cognitive ability. At the end of each level, a text message is sent through the Twilio API to the patient's physical therapist so the therapist can track their progress. This application was created in less than 24 hours, and for our efforts we were awarded 2nd Place for Best Medical Hack by Merck at HackRU Spring 2016.

My team and I won the award for Best First Hack at HackTCNJ 2016 for "REALTOVR". In 24 hours, our group of five created a virtual reality application that would allow real estate firms to broaden their market scope. The application used the Oculus Rift and Leap Motion (integrating the latest Orion update). The software would allow people who are looking to purchase homes out of state or internationally to visualize potential home layouts and fully customize them.

VirtuWalk allows people with any kind of movement disability to feel as if they are walking again. Using the Samsung Gear VR, users are immersed in another world, and when they look down, they can see their body. They can walk around, run, and even jump. There are two modes: Practice and Free Roam. Practice is for people who want to get used to the controls. Their task is to reach all three checkpoints, which are scattered across the level. They can find these checkpoints using a black arrow located in front of them that always points in the direction of the next checkpoint. Once they reach the third checkpoint, they are automatically teleported to a totally different location with new obstacles. Free Roam mode is a little different: the checkpoints are removed, and users can customize their experience. This includes changing the music, the environment, and even the time of day. A fellow student, Joe Barbato, and I created VirtuWalk within a 24-hour time frame, and we were awarded third place in the Workwave Hackathon for our efforts. The hackathon took place on the Monmouth University campus in Howard Hall. We were both freshmen when we participated.

Vizi is a next-generation visualization engine designed to allow people to see things in entirely new ways. Vizi utilizes the power of virtual reality to completely change the way you interface with 3D models. The demo was developed using the Oculus Rift and Leap Motion, and it allowed the user to control the experience naturally, using their voice and their hands. They could view different 3D models and interact with them in unique ways.

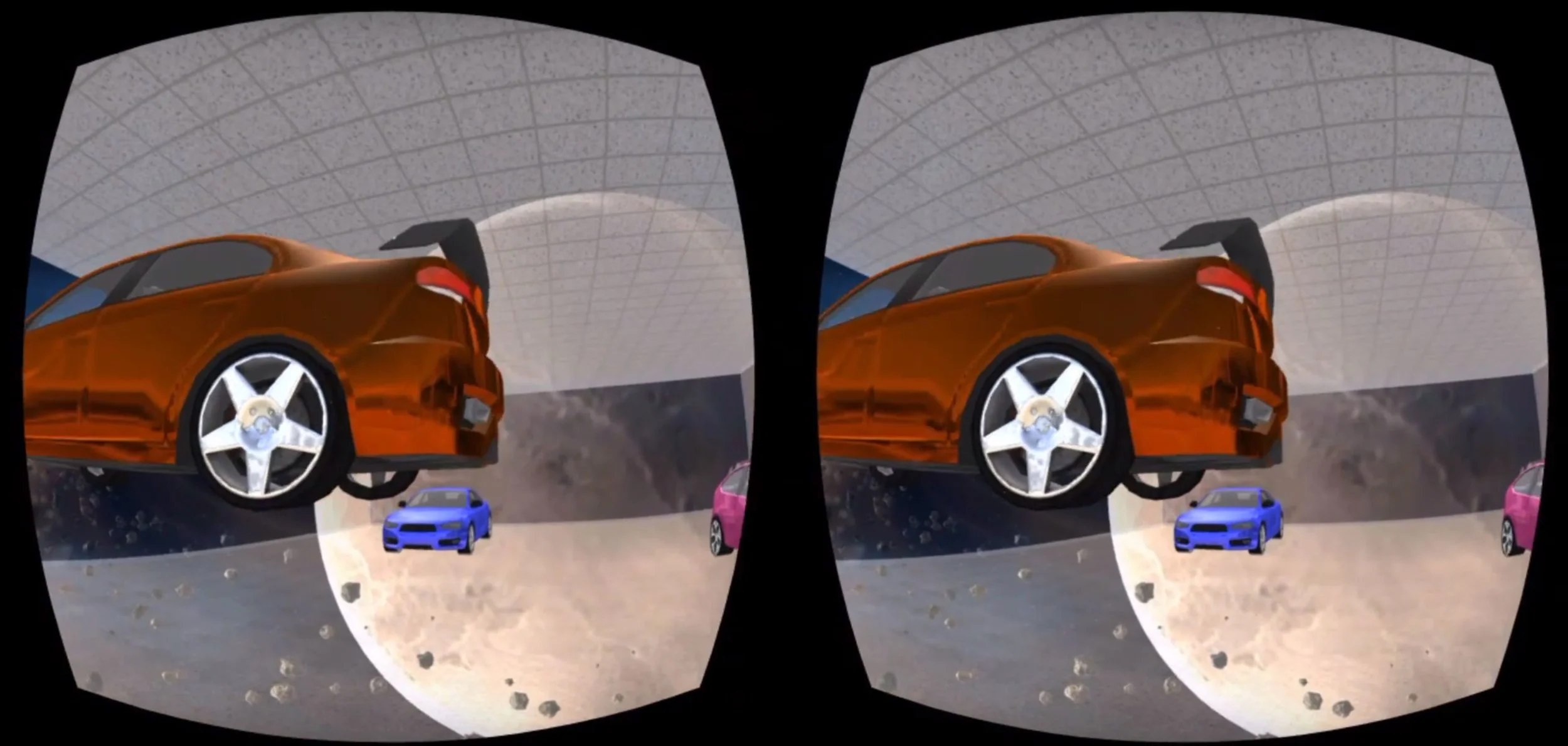

Showroom In Space is an application I developed for the Gear VR virtual reality headset. It was created so that honors students at Indian Valley Middle School could experience virtual reality for the first time. It's a virtual reality car showroom that allows you to change the color of the paint on the cars, make them levitate, rotate them, view them from every angle, and even sit inside them for fun. You can also change the environment around you, the music, and the lighting (light/dark).

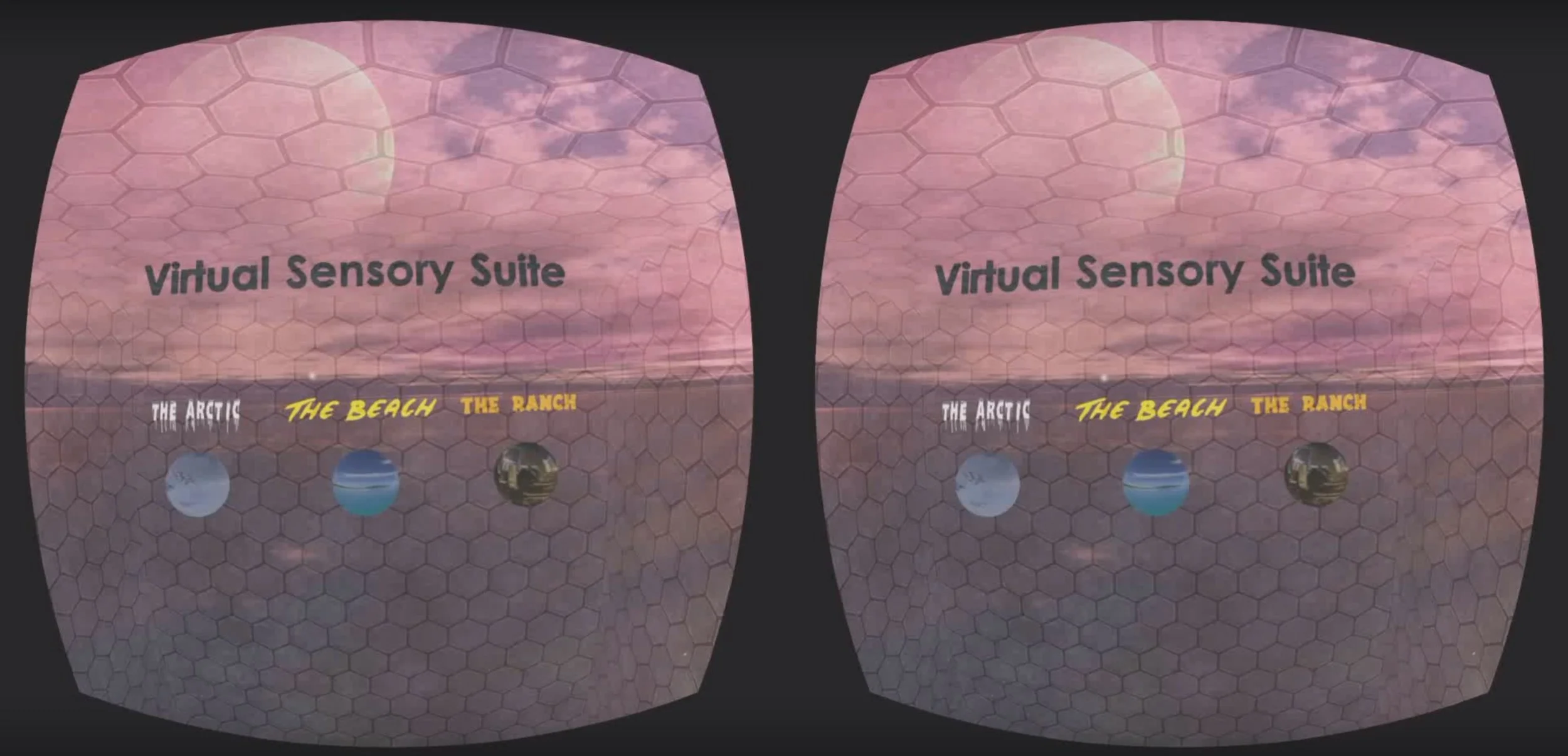

Virtual Sensory Suite is a virtual reality experience designed to give people with various brain disorders the opportunity to feel familiar emotions and recall memories from their past. Using the Samsung Gear VR, users can travel to The Arctic, The Beach, or The Ranch. In these environments, there are ways to interact, such as picking up objects, moving to different areas, and changing the time of day. All you need to know is how to use the touchpad on the side of the device to do these things. Along with immersive visuals, there is unique audio to further evoke such emotions. I've developed several virtual reality experiences, but never something that has the ability to change people's lives. However, with Virtual Sensory Suite, I am confident that I will accomplish this very task. For example, let's say you are married to someone with Alzheimer's disease. They have forgotten many things about you. You wish they would remember just one thing, to know that they still love you. You have this crazy idea to put a virtual reality headset on them and let them experience Sensory Suite. You hear nothing from them for 30 minutes, until finally they start to smile. They take off the headset and start singing you your favorite song. You're in tears. This is the kind of impact sensory integration therapy can have on people. With Virtual Sensory Suite, you will be able to feel emotions that you wouldn't be able to feel on a regular basis, which can have a huge effect on your emotional state as well as your cognitive ability. The app was developed for the Oculus Mobile VR Jam 2015.

As a senior in high school, I developed a virtual tour application for Hewlett Packard that allowed users to virtually "walk" around the HP Discover Conference. It was developed for the Google Cardboard virtual reality headset.

Percipio is a virtual reality study tool that fully immerses you in the classroom, therefore making it easier to perceive, learn, and understand new information. It is clinically proven that it is easier to remember information when you associate it with an experience, and that's exactly what Percipio allows you to do. It was created entirely using the Unity game engine and is compatible with the Oculus DK1 virtual reality headset and the Razer Hydra motion controllers.